Lots of very intelligent people are putting lots of effort into mapping the brain’s networks. These maps of which neuron is connected to which neuron are called ‘connectomes’, and if you’re working on this stuff, you’re doing ‘connectomics’. (Academics love coining new fields of study! It seems like there’s a new type of ‘omics’ every month[1]. Here’s a cheatsheet courtesy of Wikipedia, though I can’t vouch for the last on the list.)

Mapping the connectome is a great step toward understanding the brain. The problem is, what do we do with a connectome once it’s built? There’s a lot of important information about the brain’s connectivity packed into a connectome, but how do we extract it? Read on for an approach to broad-stroke, comparative brain region analysis based on frequency normalization. (Fairly technical and not recommended for a general audience.)

The challenge: turning connectomics data into knowledge.

There’s a worm called C. elegans that scientists study a lot because it’s so simple: its whole body has only 302 neurons. We’ve made a lot of progress on understanding it, but we’re still working out what each neuron does and mapping the logic of its neural circuits. With the human brain having on the order of a hundred billion neurons, it’s clear that we need some big-picture tools to make sense of all the wonderfully combinatorial complexity in our brains.

It’s not an easy task. What analytical lens could we use on the brain’s neural networks that elegantly illuminates system properties and differences between people, simplifying without being simplistic? What sort of analysis ‘carves our brains at the proverbial joints’, to paraphrase Plato?

A thought: frequency is the internal language of the brain.

The brain is horrendously, emergently complex, but all the really interesting signal encoding and processing in the brain is frequency-based. Most likely, the texture of our cognition and emotion derives from the high-level frequency dynamics in our brains. Even more strongly and less vaguely, I’d argue that most other elements of our neuro-ontology (neurotransmitters, action potentials, fMRI activity, etc.) are functionally important only insofar as they influence, or are proxies for, the frequency dynamics of the brain.

It’s a strong statement! But I think it’s warranted. If it holds, then to understand neuron activity on large scales we’ll eventually need comparative frequency analysis: some way to normalize neural networks so we can make apples-to-apples frequency comparisons between neural circuits, brain regions, or people.

An approach: can we construct a normalized framework for analyzing neural networks by flattening them into a one-dimensional bundle of interconnected pathways?

In the previous section I said we’ll need comparative frequency analysis to really understand and predict neuron activity on a large scale. But this is a very hard problem, because frequency analysis (e.g., performing a Fourier transform) depends on a certain sort of structural consistency in the medium being measured. There’s a difference in kind between sound waves bouncing around a room and electrical pulses bouncing through a neural network. Both are based on frequency, but neural networks have complex topology and state*, whereas sound does not. If we want to analyze network frequencies on scales larger than individual neurons (which on their own shed little light on overall frequency dynamics), and particularly if we want to borrow a non-trivial amount of audio terminology (which seems like a no-brainer to me), we need to control for these differences.
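To make the contrast concrete: when a signal is sampled at regular intervals along a single axis (time, in a sound recording), a discrete Fourier transform recovers its component frequencies directly. The sketch below uses NumPy’s `np.fft.rfft` and `np.fft.rfftfreq` on synthetic data; the function name `dominant_frequency`, the 1 kHz sampling rate, and the 10 Hz and 40 Hz components are illustrative assumptions, not measurements. A neural network offers no such single, evenly spaced axis, which is exactly the gap described above.

```python
import numpy as np

def dominant_frequency(signal: np.ndarray, sample_rate_hz: float) -> float:
    """Return the frequency (Hz) of the largest spectral peak.

    Only meaningful if `signal` is sampled at uniform intervals along a single
    axis; the FFT's frequency bins assume exactly that.
    """
    centered = signal - signal.mean()          # drop the DC offset so bin 0 can't win
    spectrum = np.abs(np.fft.rfft(centered))   # magnitude of each non-negative frequency bin
    freqs = np.fft.rfftfreq(len(centered), d=1.0 / sample_rate_hz)
    return float(freqs[np.argmax(spectrum)])

# Synthetic example (all values are illustrative assumptions, not data):
fs = 1000.0                                   # 1 kHz sampling rate
t = np.arange(2000) / fs                      # 2 seconds of evenly spaced samples
rng = np.random.default_rng(0)
x = (np.sin(2 * np.pi * 10 * t)               # strong 10 Hz rhythm
     + 0.3 * np.sin(2 * np.pi * 40 * t)       # weaker 40 Hz rhythm
     + 0.1 * rng.standard_normal(t.size))     # measurement noise
print(dominant_frequency(x, fs))
```

The whole calculation leans on `t` being evenly spaced; there is no equivalent of `rfftfreq` for a tangle of neurons until some normalization supplies one.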

Leaving aside the question of neurons’ internal state for now, I have a suggestion for taming the topological issue so that we could attempt structure-normalized, apples-to-apples comparisons of high-level frequency dynamics between neural circuits, brain regions, or people.

In short, we could try to computationally flatten the pathways of a neural network (e.g., a brain region’s connectome) into a one-dimensional bundle of interconnected pathways: a semi-redundant, massively parallel, linear dataset that we could take cross-sections of to measure frequency profiles, and that could be easily compared between neural networks.
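One very rough way to picture the flattening step, under assumptions of my own (the proposal above doesn’t specify an algorithm): treat the connectome as a directed graph, enumerate fixed-length simple pathways as the ‘strands’ of the bundle, then take a cross-section by counting which neurons sit at a given step across all strands. The names `flatten_to_pathways` and `cross_section` and the toy network are hypothetical.

```python
from typing import Dict, List

Graph = Dict[str, List[str]]  # neuron -> list of downstream neurons

def flatten_to_pathways(graph: Graph, length: int) -> List[List[str]]:
    """Enumerate every simple directed path containing exactly `length` neurons.

    Each path is one 'strand' of the bundle. Strands share neurons heavily,
    so the result is redundant by construction.
    """
    bundle: List[List[str]] = []

    def extend(path: List[str]) -> None:
        if len(path) == length:
            bundle.append(path)
            return
        for nxt in graph.get(path[-1], []):
            if nxt not in path:  # simple paths only: no neuron appears twice
                extend(path + [nxt])

    for start in graph:
        extend([start])
    return bundle

def cross_section(bundle: List[List[str]], position: int) -> Dict[str, int]:
    """Count how many strands pass through each neuron at step `position`."""
    counts: Dict[str, int] = {}
    for path in bundle:
        node = path[position]
        counts[node] = counts.get(node, 0) + 1
    return counts

# Toy four-neuron network (hypothetical, not real connectome data).
toy: Graph = {"A": ["B", "C"], "B": ["C", "D"], "C": ["D"], "D": ["A"]}
bundle = flatten_to_pathways(toy, length=3)
print(len(bundle))               # 8 strands
print(cross_section(bundle, 0))  # {'A': 3, 'B': 2, 'C': 1, 'D': 2}
```

This only captures structure. A fuller version would attach an activity trace to each strand so that a cross-section yields a frequency profile rather than a count, and the number of strands grows combinatorially with `length`.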

There would be lots of technical hand-waving required, and lots of pruning too, given the combinatorial nature of the dataset. For example, we might need to filter pathways by a minimum threshold of feedback loops. Fully appreciating the benefits, drawbacks, and process of this transform would take much more mathematics and expertise with complex topologies than I have. But I believe it could drastically reduce the complexity of a neural network while still preserving many important network properties, and enable comparative frequency analysis on the network.
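The pruning idea can be sketched in the same spirit. Here ‘feedback loop’ is interpreted, as an assumption, to mean a step at which a pathway returns to a neuron it has already passed through; pathways with fewer loops than a chosen threshold are discarded. Because these pathways are walks that may revisit neurons, their number can grow exponentially with length, which is why some pruning rule seems unavoidable. The function names and the toy network are hypothetical.

```python
from typing import Dict, List

Graph = Dict[str, List[str]]  # neuron -> list of downstream neurons

def enumerate_walks(graph: Graph, length: int) -> List[List[str]]:
    """All directed walks containing `length` neurons; revisits are allowed.

    Walks that reach a neuron with no outgoing connections before `length`
    are dropped. The count can grow exponentially with `length`.
    """
    walks = [[node] for node in graph]
    for _ in range(length - 1):
        walks = [w + [nxt] for w in walks for nxt in graph.get(w[-1], [])]
    return walks

def feedback_loops(walk: List[str]) -> int:
    """Count steps that land on a neuron already visited earlier in the walk."""
    seen = set()
    loops = 0
    for node in walk:
        if node in seen:
            loops += 1
        seen.add(node)
    return loops

def prune(walks: List[List[str]], min_loops: int) -> List[List[str]]:
    """Keep only walks with at least `min_loops` feedback loops."""
    return [w for w in walks if feedback_loops(w) >= min_loops]

# Toy network (hypothetical): A and B excite each other; B also feeds C.
toy: Graph = {"A": ["B"], "B": ["A", "C"], "C": []}
walks = enumerate_walks(toy, length=3)
print(walks)                      # [['A', 'B', 'A'], ['A', 'B', 'C'], ['B', 'A', 'B']]
print(prune(walks, min_loops=1))  # [['A', 'B', 'A'], ['B', 'A', 'B']]
```

The threshold is the design knob: raising `min_loops` keeps only pathways that circulate through recurrent structure, which is where sustained rhythms would plausibly live.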

Ultimately I think the best way to understand large neural networks will not be in terms of neuron connection statistics, but in terms of the frequencies and rhythms the network can generate and support. Going a bit further, I believe this structural transform would also make it easier to quantify resonance inside neural networks (treating a brain region as an acoustic chamber, in which certain patterns resonate much more strongly than others), something I think is very important.
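The ‘acoustic chamber’ intuition has at least one simple structural analogue, offered here as my own illustration rather than part of the proposal: in a directed network with adjacency matrix A, the trace of A^p counts closed walks that return to their starting neuron after exactly p hops. A network built mostly from three-neuron loops will ‘ring’ at period 3 and its multiples, loosely like a room reinforcing wavelengths that fit its dimensions. The function name and the ring network are hypothetical, and the measure ignores conduction delays, synaptic weights, inhibition, and neuron state, so its periods are in hops, not hertz.

```python
import numpy as np

def closed_walk_counts(adjacency: np.ndarray, max_period: int) -> dict:
    """Map each period p in 1..max_period to trace(A^p).

    trace(A^p) counts directed walks that return to their starting neuron
    after exactly p hops (equivalently, the sum of the p-th powers of A's
    eigenvalues). A high count at p is a crude structural hint that the
    network can re-excite itself with period p.
    """
    counts = {}
    power = np.eye(adjacency.shape[0], dtype=adjacency.dtype)
    for p in range(1, max_period + 1):
        power = power @ adjacency           # power now holds A^p
        counts[p] = int(np.trace(power))
    return counts

# Hypothetical 3-neuron ring: A -> B -> C -> A.
ring = np.array([[0, 1, 0],
                 [0, 0, 1],
                 [1, 0, 0]])
print(closed_walk_counts(ring, max_period=6))
# {1: 0, 2: 0, 3: 3, 4: 0, 5: 0, 6: 3}
```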

*A little more on the complex topology and state of neural networks: neural networks have ‘state’ in that each neuron is a unique device with its own internal variables. We can’t understand exactly how the network works, or what frequencies and rhythms it can support, unless we can decipher the internal settings of each neuron in the network, and those settings change over time. Contrast this with sound, where the signal medium doesn’t have state.

Likewise, neural networks have ‘complex topology’. Sound waves travel in straight lines in three dimensions (“simple/Euclidean topology”). Signals in neural networks, however, travel along the connections between neurons, which are definitely not arranged in neat, straight lines. It’s more like a high-dimensional, non-Euclidean space, an “Alice in Wonderland” style area where things don’t move in straight lines so much as hop through a tangled web of nodes. This is NOT to say that there is NO frequency to be analyzed, nor that other elements of audio theory (resonance, harmonics, constructive/destructive interference) aren’t present. There is, and they are. It’s just that we need to find SOME way to simplify the topology before we can start to quantify the frequency data and apply concepts from audio theory. And if we ignore the problem and skip this sort of simplification/normalization, we’ll never get good frequency data, period.

At any rate, this is one possible approach, and it may or may not prove workable. But however we enable frequency analysis inside the brain, I hope more people start thinking seriously about the normalization problem soon. Frequency is of central importance in neural function, and a method for normalizing frequency within the brain will be central to understanding how it all works.

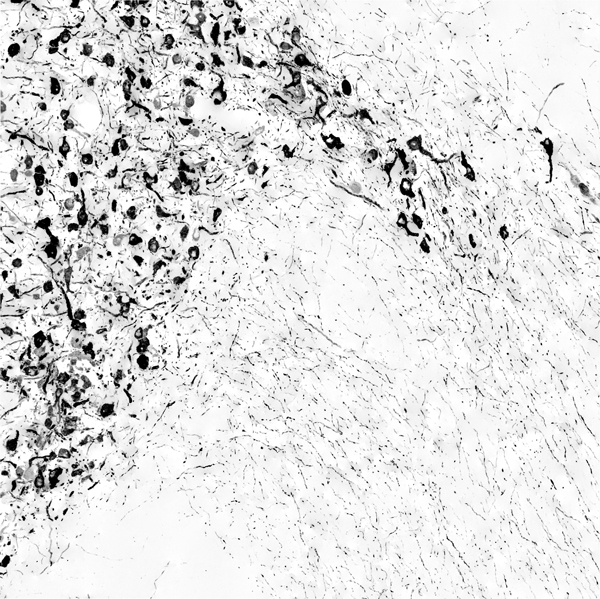

Image: Neurons from the limbic area of the Central Nervous System. Image copyright and courtesy of Bryan W. Jones.

[1] I’m reminded of this curmudgeonly letter regarding the ‘omics’ situation:

It is an old maxim that if you want to get on, invent a new word for your particular niche in an old area, and so become an instant expert. This process seems to have gone mad. A recent article in The Scientist that referred to “nutri genomics” [1] prompted me to see just how many -omics had now been coined. Well over 100 neologisms are listed at http://www.genomicglossaries.com/content/omes.asp. A few of the more ghastly examples are foldomics, functomics, GPCRomics, inomics, ionomics, interactomics, ligandomics, localizomics, pharmacomethylomics and separomics. None of these refers to areas of work that did not exist before the coining of the new word. Perhaps, as an electrophysiologist working on recombinant ion channels, I should dub myself an expert on ohmomics.

This habit of coining fancy words for old ideas might be thought harmless, merely a source of endless mirth for thinking scientists. I’m not so sure though. Apart from reinforcing the view of scientists as philistine illiterates (at least when it comes to etymology), actual harm is done to science as the public becomes aware that some among us seem to prefer long words to clarity of thought.

David Colquhoun

University College London
