Chatting with people at a recent conference on consciousness (TSC2018), I had the feeling of strolling through an alchemists’ convention: lots of optimistic energy & clever ideas, but also a strong sense that the field is pre-scientific. In short, there was a lot of overconfident hand-waving.

But a handful of promising ideas stood out as possible seeds (or at least part of the seed) for an actual science of qualia: something that could transform the study of mind from alchemy into chemistry. Today I want to list these ideas and say a few things about their ecosystem.

I. Key pieces of the formalization puzzle

Giulio Tononi’s Integrated Information Theory (IIT) is the leading (and perhaps the only) fully formal theory of consciousness. It’s essentially a mathematical method for measuring how much “integrated information” is embedded in a system, or in other words, how much each part of a system ‘knows about’ the other parts. IIT argues this is an identity relation, such that the amount of integrated information a system encodes is equal to the amount of consciousness it has.
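IIT's actual Φ is defined over a system's cause-effect repertoires, and its definition has changed across versions of the theory, so computing it is well beyond a blog sketch. As a loose illustration of the underlying intuition (how much the parts "know about" each other), here is a toy proxy. It assumes we know the joint distribution over a few binary units, and it scores the system by the mutual information across its weakest bipartition. This is not Φ. The function names and example systems are hypothetical.

```python
import itertools
import math
from collections import defaultdict

def marginal(joint, units):
    """Marginalize a joint distribution {state_tuple: prob} onto the given unit indices."""
    out = defaultdict(float)
    for state, p in joint.items():
        out[tuple(state[u] for u in units)] += p
    return out

def mutual_information(joint, part_a, part_b):
    """I(A;B) in bits between two disjoint groups of units."""
    p_a = marginal(joint, part_a)
    p_b = marginal(joint, part_b)
    p_ab = marginal(joint, part_a + part_b)
    mi = 0.0
    for state, p in p_ab.items():
        if p > 0:
            s_a, s_b = state[:len(part_a)], state[len(part_a):]
            mi += p * math.log2(p / (p_a[s_a] * p_b[s_b]))
    return mi

def min_cut_information(joint, units):
    """Toy 'integration' score: the MI across the weakest bipartition of units.

    NOT IIT's Phi: no cause-effect repertoires, no perturbations, just static correlations.
    """
    units = list(units)
    if len(units) < 2:
        return 0.0
    first, rest = units[0], units[1:]
    best = math.inf
    # Keep `first` in part A so each bipartition is visited exactly once.
    for size in range(len(rest)):
        for extra in itertools.combinations(rest, size):
            part_a = [first, *extra]
            part_b = [u for u in units if u not in part_a]
            best = min(best, mutual_information(joint, part_a, part_b))
    return best

# Hypothetical 3-unit systems, each given as a joint distribution over binary states.
copies = {(0, 0, 0): 0.5, (1, 1, 1): 0.5}  # every unit copies one fair coin
pair_plus_noise = {(a, a, c): 0.25 for a in (0, 1) for c in (0, 1)}  # unit 2 is independent
print(min_cut_information(copies, [0, 1, 2]))           # every cut carries 1 bit
print(min_cut_information(pair_plus_noise, [0, 1, 2]))  # cut {0,1}|{2} carries 0 bits
```

The minimum over cuts is the important design choice. A system made of two mutually independent halves scores zero, however tightly each half is coupled internally. IIT formalizes this intuition, far more carefully, with its minimum-information partition.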

IIT is enormously controversial, and to some extent a work in progress, but the theory does three very clever things that I think are underappreciated. First, it uses integrated information to naturally determine the boundary of conscious systems (and thus the boundary of phenomenology): if including an element increases the total integrated information (Phi), then it’s inside the boundary; if it doesn’t, it’s not. In other words, IIT solves many different kinds of problems with a single underlying mechanism. Second, it introduces the idea of Qualia Formalism: that the correct goal for a theory of consciousness is to calculate a mathematical representation of a system’s phenomenology. This central insight seems obvious once formally expressed, but was not obvious before IIT expressed it. Third, it actually makes falsifiable predictions that have been tested, and passed, which is something no other theory of consciousness has done.
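The boundary-drawing idea can be sketched with a toy integration proxy. The sketch scores every candidate subset of units by the mutual information across its weakest bipartition, then keeps the subset with the highest score. This loosely mirrors IIT's exclusion postulate, under which the conscious "complex" is the subset with maximal Φ. It is an assumption-laden illustration, not IIT's algorithm. The proxy is not Φ, the brute-force search is exponential in the number of units, and the XOR example system and the name `find_complex` are hypothetical.

```python
import itertools
import math
from collections import defaultdict

def marginal(joint, units):
    """Marginalize {state_tuple: prob} onto the given unit indices."""
    out = defaultdict(float)
    for state, p in joint.items():
        out[tuple(state[u] for u in units)] += p
    return out

def mutual_information(joint, part_a, part_b):
    """I(A;B) in bits between two disjoint groups of units."""
    p_a, p_b = marginal(joint, part_a), marginal(joint, part_b)
    mi = 0.0
    for state, p in marginal(joint, part_a + part_b).items():
        if p > 0:
            mi += p * math.log2(p / (p_a[state[:len(part_a)]] * p_b[state[len(part_a):]]))
    return mi

def min_cut_information(joint, units):
    """Toy integration proxy (NOT Phi): MI across the weakest bipartition."""
    units = list(units)
    first, rest = units[0], units[1:]
    best = math.inf
    for size in range(len(rest)):
        for extra in itertools.combinations(rest, size):
            part_a = [first, *extra]
            part_b = [u for u in units if u not in part_a]
            best = min(best, mutual_information(joint, part_a, part_b))
    return best

def find_complex(joint, n_units):
    """Brute-force 'boundary': the subset (size >= 2) with the highest proxy score.

    Ties keep the first (smallest) subset found; that tie rule is an arbitrary choice here.
    """
    best_subset, best_score = None, -1.0
    for size in range(2, n_units + 1):
        for subset in itertools.combinations(range(n_units), size):
            score = min_cut_information(joint, subset)
            if score > best_score + 1e-12:
                best_subset, best_score = subset, score
    return best_subset, best_score

# Hypothetical 4-unit system: units 0 and 1 are fair coins, unit 2 is their XOR,
# and unit 3 is independent noise. No pair is correlated, but the triple is integrated.
xor_system = {(a, b, a ^ b, c): 1 / 8 for a in (0, 1) for b in (0, 1) for c in (0, 1)}
print(find_complex(xor_system, 4))  # -> ((0, 1, 2), 1.0)
```

In this example, adding the noise unit 3 drags the weakest-cut score to zero, so it falls outside the boundary. Every pair also scores zero, because only the triple as a whole carries the dependency.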

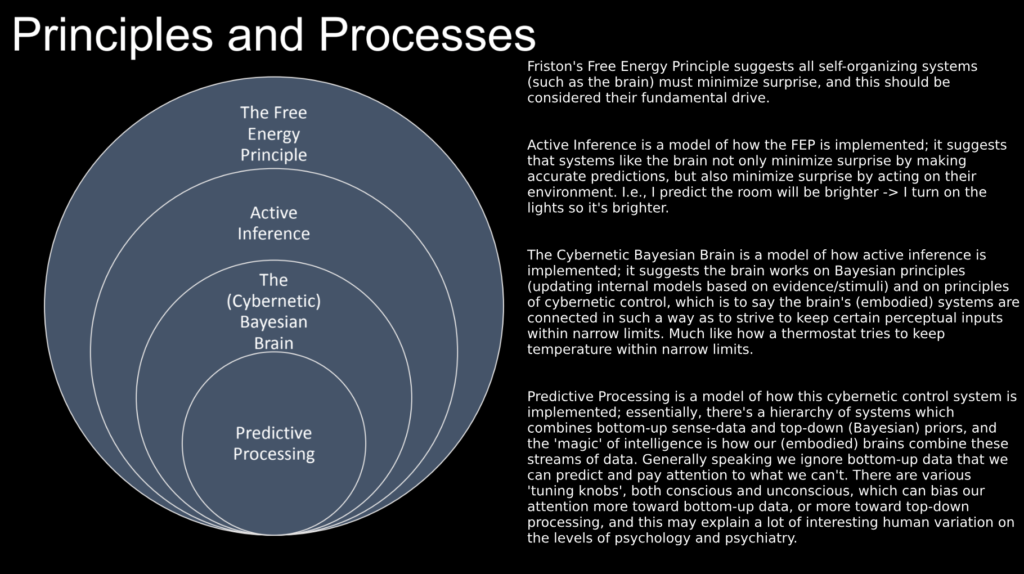

Karl Friston’s Free Energy Principle (FEP) is the leading formal theory of self-organizing system dynamics, and (in various guises) it has pretty much taken neuroscience by storm. It argues three things. First, any self-organizing system which effectively resists disorder must, as its core organizing principle, minimize its free energy. Second, free energy is an upper bound on surprise (in a Bayesian sense), so minimizing it also minimizes surprise. Third, this surprise minimization drives basically all human behavior.
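The quantity being minimized is variational free energy, which upper-bounds surprise: F(q) = KL[q(s) ‖ p(s|o)] − ln p(o) ≥ −ln p(o). Below is a minimal numeric sketch of that bound for a hypothetical two-state model. The prior, likelihood and candidate beliefs q are made up for illustration, and nothing here models a brain.

```python
import math

def free_energy(q, prior, likelihood):
    """Variational free energy F(q) = sum_s q(s) [ln q(s) - ln p(o, s)], in nats.

    q: approximate posterior over hidden states; prior: p(s);
    likelihood: p(o | s) for the one observation o actually received.
    """
    return sum(
        qs * (math.log(qs) - math.log(ps * ls))
        for qs, ps, ls in zip(q, prior, likelihood)
        if qs > 0
    )

def surprise(prior, likelihood):
    """Surprise of the observation: -ln p(o), with p(o) = sum_s p(s) p(o | s)."""
    return -math.log(sum(ps * ls for ps, ls in zip(prior, likelihood)))

def exact_posterior(prior, likelihood):
    """Bayes' rule: p(s | o) = p(s) p(o | s) / p(o)."""
    joint = [ps * ls for ps, ls in zip(prior, likelihood)]
    total = sum(joint)
    return [j / total for j in joint]

# Hypothetical two-state world: state 0 is a priori likely, but the observation favors state 1.
prior = [0.8, 0.2]
likelihood = [0.1, 0.9]

print("surprise:", surprise(prior, likelihood))
for q in ([0.5, 0.5], [0.9, 0.1], exact_posterior(prior, likelihood)):
    # F >= surprise for every q, with equality only when q is the exact posterior.
    print(q, free_energy(q, prior, likelihood))
```

Minimizing F over q amounts to approximate Bayesian inference. Because F never drops below the surprise, driving F down also caps how surprised the system can be.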

The FEP is notoriously difficult to understand*, but it has also provided a unifying frame for systems neuroscience. Many of the things we’ve laboriously discovered about how the brain works (and the common ways it malfunctions) just naturally ‘pop out of’ Friston’s equations.**

*With a nod to Chalmers, I’d pose the meta-problem of free energy: why does trying to understand Friston’s argument that all systems try to minimize their free energy so reliably increase our free energy?

**There are some similarities between Tononi’s IIT and Friston’s FEP. These might be due to both having worked together under Gerald Edelman at the Neurosciences Institute. Edelman won his Nobel Prize for work on the adaptive immune system, and then applied the same principles of self-organization to study the brain. In a substantial sense, IIT and FEP are different extrapolations of Edelman’s “neural darwinism” frame.

Selen Atasoy’s Connectome-Specific Harmonic Waves (CSHW) is a new method for interpreting neuroimaging which, unlike conventional approaches, may plausibly measure things directly relevant to phenomenology. Essentially, it combines MRI, DTI and fMRI data to calculate two things. The first is a brain’s intrinsic harmonics: the eigenmodes of its structural connectome, each with an eigenvalue that sets its spatial frequency, i.e. the patterns which naturally resonate in a given brain. The second is how the brain is currently distributing energy (periodic neural activity) across these harmonics. CSHW is a fairly young paradigm, but several big names (Kringelbach, Carhart-Harris) are already on board.
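Connectome harmonics are, roughly, the eigenvectors of a graph Laplacian built from the structural connectome, and activity is then projected onto them. Below is a minimal numpy sketch of that decomposition under heavy assumptions. A tiny hypothetical ring of 8 "regions" stands in for real tractography data, a synthetic activity pattern stands in for an fMRI snapshot, and the plain combinatorial Laplacian L = D − A is used. The real pipeline involves cortical surface meshes, DTI tractography and much more preprocessing.

```python
import numpy as np

def connectome_harmonics(adjacency):
    """Eigen-decompose the graph Laplacian L = D - A of a symmetric adjacency matrix.

    Returns eigenvalues in ascending order (a rough 'spatial frequency' per harmonic)
    and the matching orthonormal eigenvectors as columns (the harmonic patterns).
    """
    degree = np.diag(adjacency.sum(axis=1))
    laplacian = degree - adjacency
    return np.linalg.eigh(laplacian)  # eigh, because L is symmetric

def harmonic_energy(activity, harmonics):
    """Project one activity snapshot onto the harmonics; return the energy in each one."""
    coefficients = harmonics.T @ activity
    return coefficients ** 2

# Hypothetical 'connectome': 8 regions wired in a ring.
n = 8
adjacency = np.zeros((n, n))
for i in range(n):
    adjacency[i, (i + 1) % n] = adjacency[(i + 1) % n, i] = 1.0

eigenvalues, harmonics = connectome_harmonics(adjacency)
activity = np.cos(2 * np.pi * 2 * np.arange(n) / n)  # a pattern wrapping the ring twice
energy = harmonic_energy(activity, harmonics)
print(np.round(eigenvalues, 3))
print(np.round(energy / energy.sum(), 3))  # share of total energy in each harmonic
```

Because this activity pattern wraps the ring exactly twice, essentially all of its energy should land in the two harmonics with eigenvalue 2. This is a miniature version of the "energy distribution across harmonics" described above.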

Why do I think CSHW will be so powerful for talking about phenomenology?

First, it seems a priori plausible that systems like the brain, with significant periodicity, will self-organize around their eigenmodes; i.e. these eigenmodes will be functionally significant and ‘costless’ Schelling points. This implies that these harmonics will be a good place to start if we want to efficiently compress much of the brain’s (and mind’s) complexity.

Second, Atasoy and QRI’s own Emilsson have already offered compelling stories about how differences in the harmonic energy distribution might influence phenomenology. These models are ever-improving. Given someone’s connectome harmonics at two different points in time, I think we can make reasonably good educated guesses about how the texture of their subjective experience has changed. No other neuroimaging paradigm can claim this at any significant degree of granularity.

Third, I suspect the way our brains naturally model other brains is through modeling their connectome harmonics! If so, in studying CSHW we’re tapping into the same shortcuts for understanding other minds, the same compression schemas, that evolution has been using for hundreds of millions of years. This is a big claim, to be developed later.

Atasoy’s work essentially decomposes brain activity (waves of excitation and inhibition) into its constituent harmonics, such as these. Note that each pattern perfectly repeats over time; they ‘wrap around’ the brain an integer number of times. Image credit: Andrés Gomez Emilsson & Selen Atasoy, et al.

QRI’s Symmetry Theory of Valence (STV) is plausibly the first line in the Rosetta Stone of consciousness: namely, if we have a mathematical representation of a subjective experience (such as the output of IIT), the symmetry of this representation will correspond to the emotional valence of the experience. To put it succinctly: harmony in the brain = pleasure, and this is an identity relation which is universally true, not a ‘mere correlation’.

STV is still a young theory, but it’s generating philosophical clarification (how ‘pleasure centers’ function; how various drugs change our mood; why music is so affectively compelling; why we seek out pleasure) as well as falsifiable predictions (the world’s first method for measuring emotional valence using fMRI built entirely from first principles; tinnitus likely causes hidden affective blunting) with more in the pipeline. I’m admittedly biased, but I’m excited for the road ahead, and view this as the pilot project for reverse-engineering all sorts of qualia.

II. Ecosystem thinking

I think all of the above theories are talking about things that are real and important. To slightly mix metaphors, if everybody doing consciousness research is blind, then at least these paradigms have found the elephant and are each describing a different part of it.

But the ultimate goal here is unification. We want to combine these different ways of ontologizing and understanding the mind into new forms of knowledge that have all their collective strengths and none of their individual weaknesses, and so perceive the whole elephant. This depends on understanding the relational ecosystem of such theories: what special insight each theory brings to the table, and what tools it might benefit from borrowing from the others.

Here’s my (circa June 2018) take on this:

IIT’s core insights are that causal microstructure matters for phenomenology and defines a topology, that phenomenal systems have boundaries, and that knowledge about qualia requires a formalism about qualia. But aside from a bit of exploratory work on animats, it says little about the evolution of integrated information in systems, nothing about embodied minds, little about how large-scale brain dynamics might map to qualia dynamics, and little about what the ‘natural kinds’ of qualia are.

What could IIT borrow from other pieces?

FEP: Principles (multilevel constraints) by which all evolved systems self-organize (i.e. the system-level constraints by which high Phi is selected); also symbol grounding, via the inherent cybernetic/embodied nature of FEP (thanks to Adam Safron for this point).

CSHW: A semi-clean, high level of abstraction which may elegantly proxy certain aspects (emotion & integration?) of phenomenology. (And a much better paradigm than EEG for applying IIT?)

STV: A qualia natural kind, the other half of ethics, another way to test the formalism.

FEP’s core insights are threefold. Surprise minimization is the absolute core directive of all successful self-organizing systems. Action, intention and everything in between can be framed as surprise minimization. And successful systems spend most of their time in a small subset of their possible states. However, despite these enormous insights, FEP fails as a theory of phenomenology, because it says nothing about phenomenology. It’s also difficult to literally measure free energy, at least in a useful way. Finally, the way the FEP models emotions is clever, but admits of many counterexamples and edge cases.
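Here is a minimal sketch of why "minimize surprise" and "spend most of your time in a few states" go together. It assumes an ergodic system whose long-run state occupancy is a fixed distribution. Averaged over time, surprise, −ln p(state), then equals the entropy of that distribution. A system that keeps its average surprise low must therefore concentrate its time in a few states. The two distributions are hypothetical, and this shows only the surprise/entropy bookkeeping, not anything brain-specific.

```python
import math
import random

def entropy(probs):
    """Shannon entropy H = -sum p ln p (nats) of a state-occupancy distribution."""
    return -sum(p * math.log(p) for p in probs.values() if p > 0)

def average_surprise(probs, n_steps, seed=0):
    """Sample n_steps visited states from probs and average their surprise, -ln p(state)."""
    rng = random.Random(seed)
    states = list(probs)
    visited = rng.choices(states, weights=[probs[s] for s in states], k=n_steps)
    return sum(-math.log(probs[s]) for s in visited) / n_steps

# Two hypothetical systems over the same four states.
spread_out = {"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}
concentrated = {"a": 0.97, "b": 0.01, "c": 0.01, "d": 0.01}

for name, probs in [("spread_out", spread_out), ("concentrated", concentrated)]:
    h = entropy(probs)
    # exp(H) is a common 'effective number of states' the system occupies.
    print(name, round(h, 3), round(average_surprise(probs, 100_000), 3),
          "effective states:", round(math.exp(h), 2))
```

The concentrated system is rarely surprised on average, even though each visit to a rare state is very surprising. Its effective number of occupied states is close to one.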

What could FEP borrow from other pieces?

IIT: A principled boundary for, and thus a bridge to, phenomenology.

CSHW: A semi-clean, high level of abstraction which tracks & constrains (provides Schelling points for) FE flows.

STV: A phenomenologically-supported expectation of how the brain implements FE gradients algorithmically (symmetry as computational success target); a better model for valence than FE-over-time.

CSHW’s core insights are that the brain is a system which exhibits significant & semi-discrete resonances, has probably self-organized around its natural resonances, and that changes in the brain’s resonant profile likely track changes in phenomenology. However, CSHW says very little about the functional or phenomenological significance of specific resonances & dynamical interactions between resonances, all of which would be really interesting to know.

What could CSHW borrow from other pieces?

IIT: A place to put knowledge of phenomenology. Much more, if IIT figures out how to calculate Phi from brain harmonics.

FEP: The functional significance of certain connectome-harmonic (CH) dynamics. Are there ‘dynamo’ harmonics that collect free energy? What constraints does FE minimization put on what eigenvalues in such a system mean?

STV: A success condition for computation; a clean way to derive valence from CHs.

STV’s core insights are that symmetry is the first place to look for qualia natural kinds, and that most other theories are leaving value on the table by not directly addressing symmetry or valence (each of which will lead to the other). But STV alone doesn’t have as grand a scope as the other theories mentioned, and says little about their bailiwicks.

What could STV borrow from other pieces?

IIT: A place to put knowledge about qualia (like symmetry/valence).

FEP: A framework for understanding multi-level self-organization towards (and sometimes away from) symmetry.

CSHW: An empirical framework for proxying symmetry-in-phenomenology.

STV is a smaller theory, so it tends to be easy to combine with the others (especially IIT & CSHW). I’m not sure what would be necessary to unify FEP and IIT, FEP and CSHW, or IIT and CSHW. But I’d bet on an eventual Grand Unification of all four, and I suspect this unification will usher in a new era of studying the mind (and thus, perhaps, a new era of humanity).

III. Musings about the pursuit of the One True Ontology

One underappreciated thing about each of the architects above is how deeply they live and breathe their ontologies. Much as a primatologist who’s studied dominance hierarchies for 30 years sees everything through that lens, or a preacher may see the hand of God in everything, the theorists above see reality in terms of their core ontologies. E.g., I have no doubt that Tononi sees everything through the lens of cause-effect clusters, each with their own Phi. Friston sees everything as a Markov blanket (including, I suspect, literally seeing people as walking Markov blankets). And so on. I call this being a ‘One True Ontologist’.

And I think it’s underappreciated how good, necessary, and generative this is.

First, any ontology which can satisfyingly explain the brain and the mind might be powerful enough to be the last ontology we need; it might just be Truth-with-a-capital-T. It pays to aim high and look for this.

Second, original work at the cutting edge of these fields is very difficult, and people have to be intensely motivated and emotionally inspired to try. The strongest creative motivation there is comes from the feeling of explaining everything in terms of your Big Idea. Likewise, it takes a Big Idea to break out of current paradigms & equilibria. So selection effects on the frontiers favor Big All-Encompassing Ideas.

Third, having a Big Idea about the mind that nobody else has is a substantial social advantage.

More on this latter point: we routinely and instinctively employ methods to obfuscate our inner states and intentions; to do so is to be a strategic human in an uncertain world. On the other hand, having a novel (and accurate) method to model minds is very effective at circumventing most of the social obfuscations people instinctively throw up, especially if it carves its ontology differently from how most people do. You get a piercing understanding of people. I don’t want to give away any secrets, but I think all four theories above are worth studying even from just this perspective.

At any rate, I think chasing the One True Ontology is a worthwhile goal.

Of course, to truly win the ontology game, one has to eat the current king, which is physics. That is, one has to prove one’s ontology can maintain consistency with, and ideally derive & improve on, the predictions of physics. More on this later.
